Research

I co-direct ALPS (Albany Lab for Privacy & Security) in the Computer Science Department.

My research interests revolve around security, privacy, and trust in computing environments. I am interested in developing theories and mechanisms for protecting information in complex systems such as online social networks, and for enabling data sharing while preserving user privacy. Beyond theory in the security domain, my work draws inspiration from formal methods, knowledge representation and reasoning, data mining, and information system design, among other areas.

More specific areas of interest include:

- Theoretical models and mechanisms for specification, verification, analysis, and testing of access control policies

- Privacy in online social networks

- Privacy-preserving data sharing and mining of complex data such as graphs

- Privacy-enhancing technologies in domains such as web and location-based services

- Detecting and countering social engineering attacks

Research Projects

The following are some of my ongoing and past projects.

Black-Box Learning of Authorization Policies

A common application-level vulnerability is a missing or misconfigured authorization policy. Authorization policies ensure that each application user can access only the resources they are supposed to access. The major problem is that most applications do not provide an adequate specification of their authorization policies. Approaches such as formal verification are therefore impractical for detecting these vulnerabilities, since there is no specification to verify against. Our ultimate goal in this project is to learn authorization policies from applications by observing their authorization behavior, without requiring access to their complete code or other internal details. The learned policies can then be used for further analysis of the privacy and security of applications. This project was recently funded through an NSF CAREER award.

Detecting and Countering Social Engineering Attacks

Our computing systems have become significantly more secure. As a result, attackers have turned to compromising a typically weaker (less secure) element of these systems: humans. Through social engineering techniques, human users can be persuaded to help attackers achieve their goals, whether by supplying their authentication credentials or by divulging sensitive corporate information. We develop intelligent solutions, based on machine learning and natural language processing techniques, to detect and counter social engineering attacks. Our collaborative project, PANACEA (Personalized AutoNomous Agents Countering Social Engineering Attacks), has been funded by DARPA.

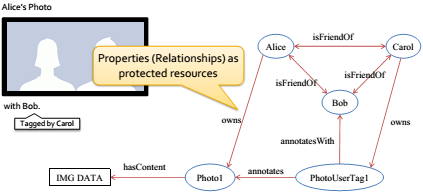

Privacy Control in Online Social Networks

Online social networks (OSNs), such as Facebook, operate on various information resources related to their users, many of which are potentially privacy-sensitive. Protecting information in such an environment is challenging due to the interconnected nature of information objects and users, and because both users and the system should be able to specify authorization policies for data access. I study the specification, enforcement, and analysis of privacy control policies in OSNs.

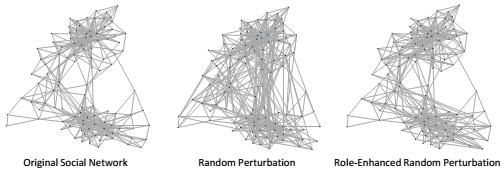

Anonymizing Social Network Datasets

The study of social networks is growing in domains such as academia, business, and even government, where it is used to identify interesting patterns at either the node or the network level. In many social network datasets, the exact identity of the people involved does not matter for the purpose of the study. Yet such datasets may carry sensitive information, so adequate measures should be in place to protect against reidentification. Recent work in the literature has shown that structural patterns can assist reidentification attacks on naively anonymized social networks. Consequently, there have been proposals to anonymize the structure of networks to prevent such attacks. However, these methods usually introduce a large amount of distortion into the datasets, raising serious questions about their utility for social network analysis. My research focuses on improving the utility of anonymization methods without sacrificing their privacy guarantees.
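
To illustrate why naive anonymization (replacing names with pseudonyms) can fail, here is a minimal sketch assuming an adversary whose only background knowledge is how many connections a target has. The function names are hypothetical, and degree is just one simple kind of structural pattern; this is not the anonymization method developed in this research. A graph in which every degree value is shared by at least k nodes is sometimes called k-degree anonymous in the literature.

```python
from collections import Counter

def node_degrees(nodes, edges):
    # Start every node at 0 so isolated nodes are counted as well.
    degree = {n: 0 for n in nodes}
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return degree

def degree_anonymity_level(nodes, edges):
    # Size of the smallest group of nodes that share the same degree.
    # Assumes a non-empty node list.
    counts = Counter(node_degrees(nodes, edges).values())
    return min(counts.values())

def uniquely_identified(nodes, edges):
    # Nodes whose degree no other node shares: an adversary who knows only
    # a target's number of connections can single these nodes out.
    degree = node_degrees(nodes, edges)
    counts = Counter(degree.values())
    return sorted(n for n, d in degree.items() if counts[d] == 1)

# Pseudonymized graph: node "a" is the only one with 3 connections.
nodes = ["a", "b", "c", "d"]
edges = [("a", "b"), ("a", "c"), ("a", "d"), ("b", "c")]
print(degree_anonymity_level(nodes, edges))  # 1
print(uniquely_identified(nodes, edges))     # ['a', 'd']
```

Structural anonymization methods modify the graph (for example, by adding or removing edges) until such unique patterns disappear; the more edits they make, the more the dataset is distorted, which is the utility cost discussed above.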

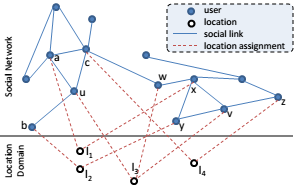

Anonymizing Location-Rich Data

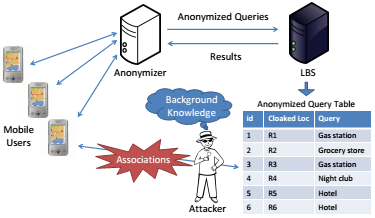

Many systems today collect and leverage location information and movement traces, ranging from search engines that retrieve results relevant to your location to OSNs, such as Foursquare, for explicitly sharing your location. However, your whereabouts can reveal a lot about you. An adversary may reidentify you in a location-rich dataset based on your location, even if the data is anonymized. Moreover, once your identity is exposed to an adversary, you may be tracked. I have explored preserving user privacy in two areas: anonymizing location-based queries submitted to Location-Based Services (LBSs), and anonymizing datasets collected by geosocial networking systems (GSNSs). Unlike many approaches in the literature, I propose safe notions of anonymity for LBSs. I also propose notions of anonymity in geosocial networks that are based not only on a user’s location but also on the locations of her friends.

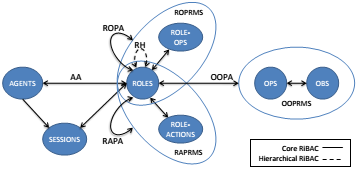

Access Control and Secure Interoperation in Modern Information Environments

Modern information environments introduce challenging security and privacy requirements. With a group of colleagues, I have explored security issues in multi-agent systems and proposed an access control model to secure interactions among agents. I have also studied secure interoperation in multi-domain environments, proposing a secure interoperation framework that guarantees the enforcement of temporal and separation-of-duty access constraints across domains. In addition, I have studied modelling and enforcing privacy policies within corporate access control policies.