Hey, Can You All Look Here?

Bias-aware Gaze Uniformity Assessment in Group Images

Key Contributors: Omkar Kulkarni and Pradeep K. Atrey

PROBLEM:

Do you capture photos in group settings? => Yes!

Are there multiple individuals taking pictures of the group? => Often!

Do all members of the group face the same camera or gaze in the same direction? => Aahhh... No!

SOLUTION:

Don't worry, we have GARGI for you!

Research Objective

Since the advent of the smartphone, the number of group images taken every day has grown rapidly. A group image is one that contains more than one person. When taking a group picture, photographers often struggle to make sure that everyone looks in the same direction, typically toward the camera. The problem is more common when multiple photographers photograph the same group, because the subjects' attention is split among several cameras. The direction in which a person looks is called their gaze. Gaze uniformity is an important criterion for judging the aesthetic quality of group images. The objective of this research is to develop a method that achieves uniform gazes among the individuals in group photographs and thereby improves their overall aesthetic quality.
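To make "gaze uniformity" concrete, here is a minimal sketch of one possible score. It assumes each person's gaze is already available as a non-zero 3D direction vector (for example, from an off-the-shelf gaze estimator) and measures nonuniformity as the mean pairwise angle between those vectors. This is an illustrative choice of ours, not the metric used in GARGI; the function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Sequence


def angle_deg(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in degrees between two non-zero 3D gaze direction vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        raise ValueError("gaze vectors must be non-zero")
    cos = sum(x * y for x, y in zip(a, b)) / (na * nb)
    # Clamp to [-1, 1] so floating-point error cannot break acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def gaze_nonuniformity(gazes: Sequence[Sequence[float]]) -> float:
    """Mean pairwise angle (degrees) between subjects' gaze directions.

    0.0 means every subject looks the same way; larger values mean
    less uniform gazes. Vector length is ignored, only direction counts.
    """
    pairs = list(combinations(gazes, 2))
    if not pairs:  # zero or one subject: trivially uniform
        return 0.0
    return sum(angle_deg(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    print(gaze_nonuniformity([(0, 0, 1), (0, 0, 1), (0, 0, 1)]))
    print(gaze_nonuniformity([(0, 0, 1), (0, 0, 1), (1, 0, 0)]))
```

A pairwise score only captures whether people look the same way as one another, not whether they look at the camera; checking against an expected direction per person would need extra input such as the camera's position.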

Contributions

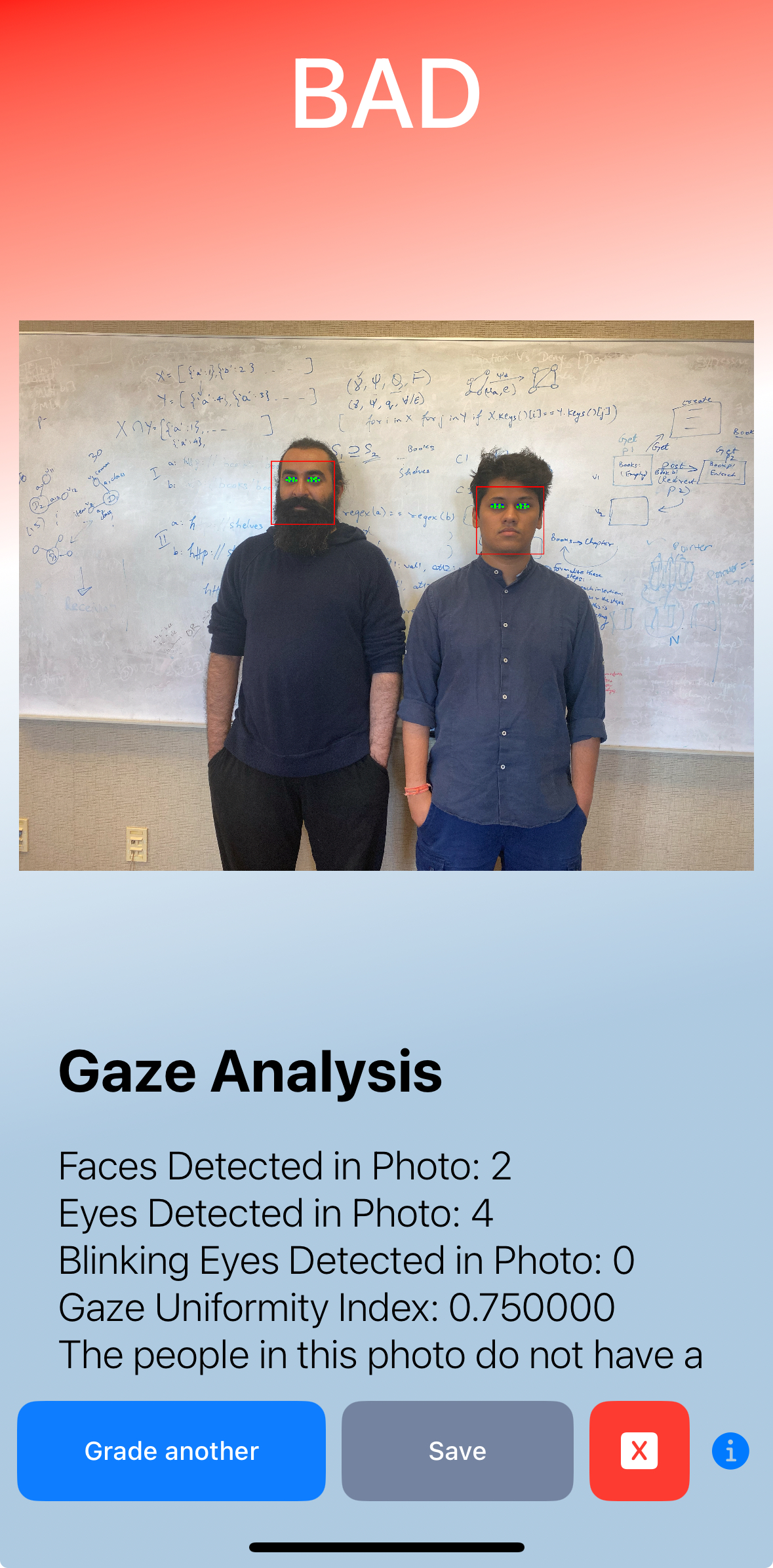

To address gaze nonuniformity in group images, we propose novel techniques to detect it and, if needed, correct it in a bias-aware manner. Our proposed method, GARGI (Gaze-Aware Representative Group Image), uses AI algorithms to select the group image with the best gaze uniformity. Unlike existing mechanisms, such as those in Apple iPhones, which overlook gaze, especially in group settings, GARGI prioritizes minimizing each subject's gaze deviation from their expected gaze direction. In addition, we audit the proposed gaze uniformity detection framework and its underlying algorithms for gender bias.
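The selection idea in the paragraph above (pick the image in which subjects deviate least from their expected gaze directions) can be sketched as follows. This is not the published GARGI algorithm: we assume per-frame, per-subject gaze vectors are already estimated, that each subject's expected direction is given (e.g., toward the camera), and that a frame's score is simply the sum of angular deviations. The helper `group_error_gap` is a hypothetical, simplified example of a bias audit: it compares mean error across demographic groups (such as gender) and reports the largest gap. All names here are our own.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_deg(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in degrees between two non-zero 3D direction vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        raise ValueError("direction vectors must be non-zero")
    cos = sum(x * y for x, y in zip(a, b)) / (na * nb)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def frame_deviation(gazes: Sequence[Vec3], expected: Sequence[Vec3]) -> float:
    """Sum over subjects of the angle between actual and expected gaze."""
    if len(gazes) != len(expected):
        raise ValueError("one expected direction per subject is required")
    return sum(angle_deg(g, e) for g, e in zip(gazes, expected))


def select_representative_frame(
    frames: Sequence[Sequence[Vec3]], expected: Sequence[Vec3]
) -> int:
    """Index of the candidate frame with the smallest total gaze deviation."""
    if not frames:
        raise ValueError("at least one candidate frame is required")
    scores = [frame_deviation(f, expected) for f in frames]
    return min(range(len(scores)), key=scores.__getitem__)


def group_error_gap(
    errors: Sequence[float], groups: Sequence[str]
) -> Tuple[Dict[str, float], float]:
    """Mean error per demographic group, plus the largest between-group gap."""
    buckets: Dict[str, List[float]] = defaultdict(list)
    for err, grp in zip(errors, groups):
        buckets[grp].append(err)
    if not buckets:
        raise ValueError("no samples to audit")
    means = {g: sum(v) / len(v) for g, v in buckets.items()}
    return means, max(means.values()) - min(means.values())


if __name__ == "__main__":
    toward_camera = (0.0, 0.0, -1.0)  # hypothetical camera-facing direction
    frames = [
        [(0.0, 0.0, -1.0), (1.0, 0.0, -1.0)],  # second subject ~45 degrees off
        [(0.0, 0.0, -1.0), (0.0, 0.0, -1.0)],  # both subjects face the camera
    ]
    print(select_representative_frame(frames, [toward_camera, toward_camera]))
    print(group_error_gap([2.0, 4.0, 3.0, 5.0], ["F", "F", "M", "M"]))
```

Summing raw angles treats every subject equally; a real system might weight subjects differently or reject frames with closed eyes, which this sketch does not attempt.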

Demo

|

|

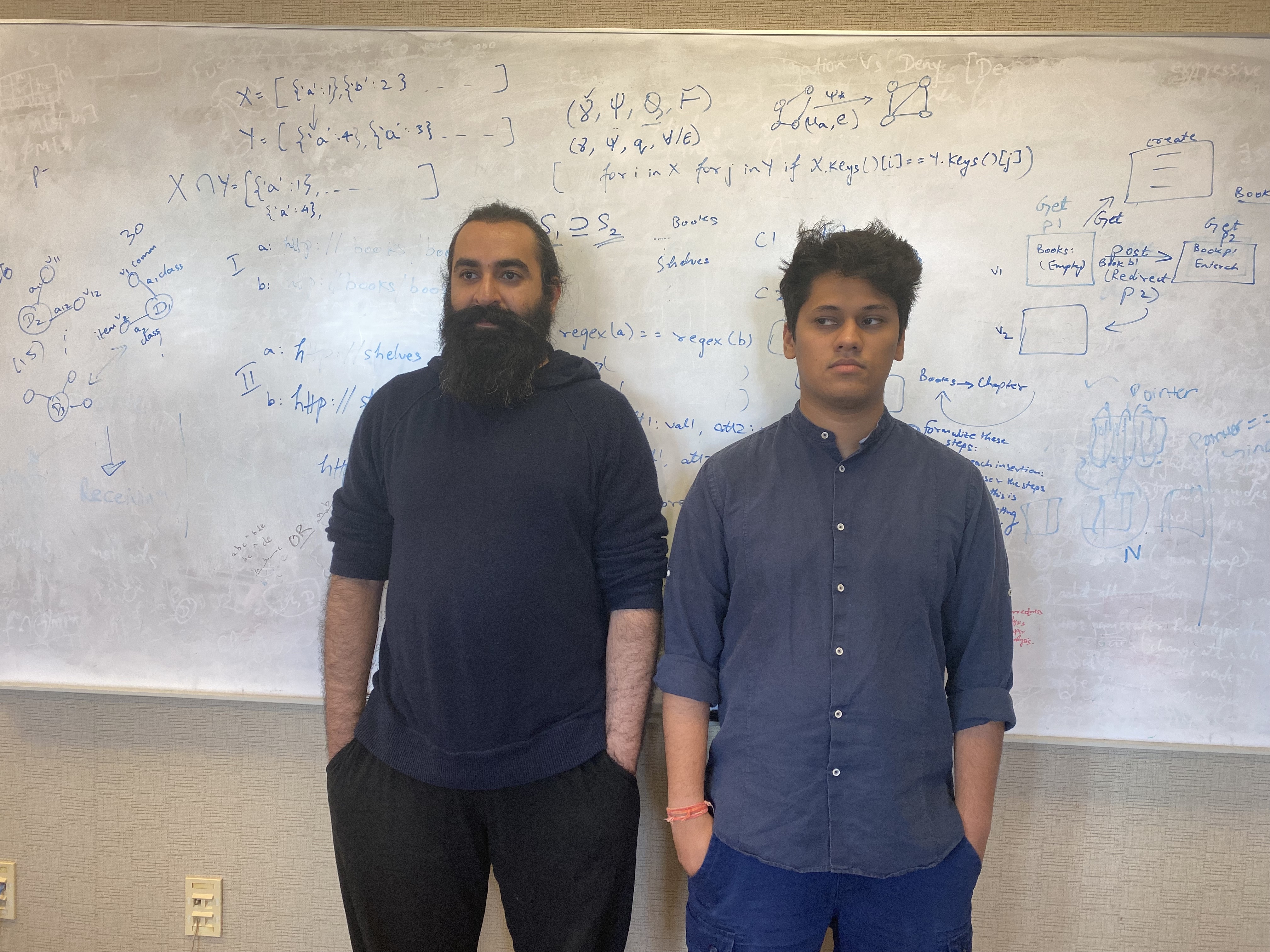

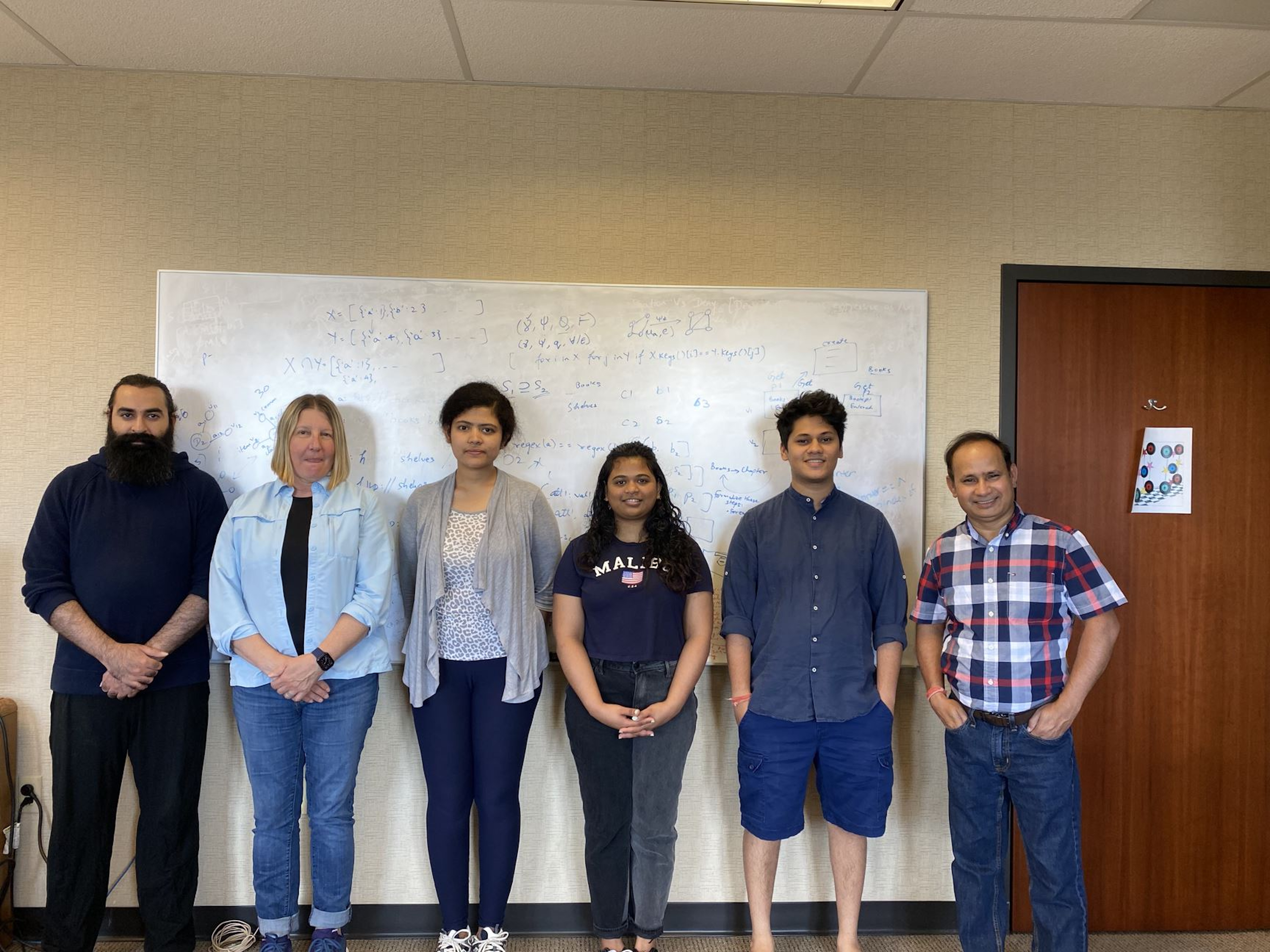

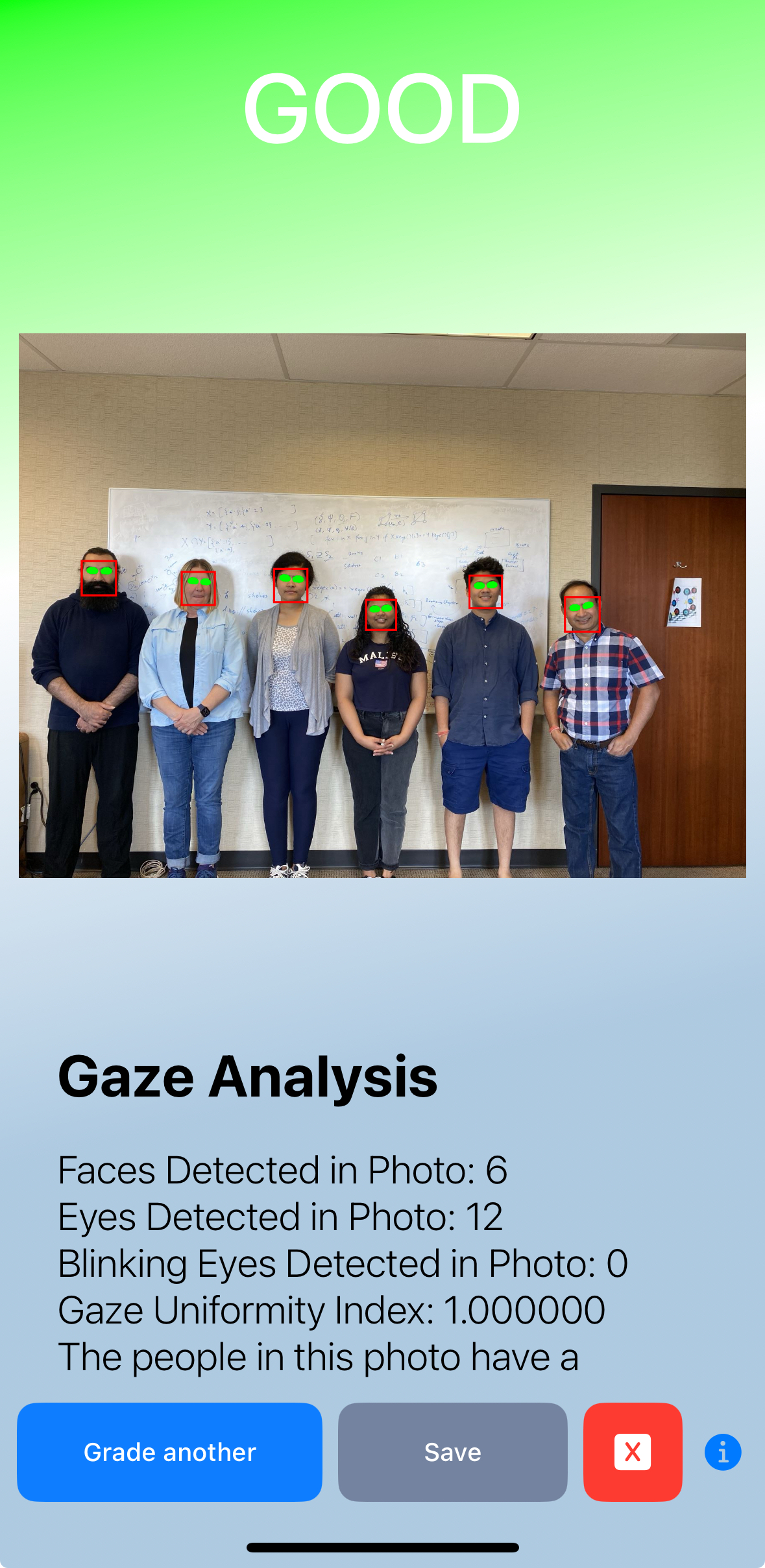

| A sample photo consisting of two people with different gazes (no gaze uniformity) | A sample photo consisting of six people with uniform gazes |

|

|

|

| Main screen of our app. | Output of our app corresponding to the input image shown above-left. | Output of our app corresponding to the input image shown above-right. |

Related Publications

- O. Kulkarni, A. Mishra, S. Arora, V. K. Singh, and P. K. Atrey. LivePics-24: A multi-person, multi-camera, multi-settings live photos dataset. IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR'24), San Jose, CA, USA, August 2024.

- O. Kulkarni, T. Lloyd-Jones, M. Tran, G. Vincent Jr., V. K. Singh, and P. K. Atrey. Where you look matters in group photos: A demo of GARGI iOS app. IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR'24), San Jose, CA, USA, August 2024. (Poster)

- O. Kulkarni, S. Arora, A. Mishra, V. K. Singh, and P. K. Atrey. A multi-stage bias reduction framework for eye gaze detection. IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR'23), Singapore, August 2023.

- O. Kulkarni, S. Arora, and P. K. Atrey. GARGI: Selecting gaze-aware representative group image from a live photo. 5th IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR'22), San Jose, USA, August 2022.

- O. Kulkarni, V. Patil, V. K. Singh, and P. K. Atrey. Accuracy and fairness in pupil detection algorithm. IEEE International Conference on Big Multimedia (BigMM'21), Taichung, Taiwan, November 2021.

- O. Kulkarni, V. Patil, S. Parikh, S. Arora, and P. K. Atrey. Can you all look here? Towards determining gaze uniformity in group images. IEEE International Symposium on Multimedia (ISM'20), Taipei, Taiwan, December 2020.

Data Set

For this research, we used our own dataset, LivePics-24. It includes: i) multiple group images taken by a single photographer in both instant mode and live mode (the Live Photos mode available on Apple iPhones), and ii) one or more group images taken by more than one photographer, also in both instant and live mode. The dataset has been released for use by researchers and can be found here.

People

Other individuals who have contributed to various aspects of this work are Vivek K. Singh, Shashank Arora, Vikram Patil, Shivam Parikh, Aryan Mishra, Gregory Vincent, My Tran, and Thomas Lloyd-Jones.

Last updated: September 4, 2024.