Albany Lab for Privacy and Security (ALPS)

- Projects led by Prof. Pradeep K. Atrey

"Multimodal AI Meets Security, Privacy, Trust, and Fairness"

My research is fundamentally guided by the principle of "Science in Service to Society."

For nearly two decades, I have had the privilege of dedicating myself to a mission of serving society by making impactful scientific contributions at the intersection of cybersecurity, privacy, trust, and fairness, with a focus on multimodal data.

"The key to solving a problem lies in understanding it."

[People] [Presentations]

Research Projects

Cybersecurity and Forensics

Do you encounter cyberattacks such as email phishing? => Yes. We have solutions for you!

This research focuses on detecting and preventing phishing attacks by leveraging lightweight search features and secure authentication techniques to improve browser security. The approach includes a system that employs efficient search methods for phishing detection and a Bluetooth Low Energy (BLE) authentication scheme to safeguard against phishing attempts through browser extensions. Furthermore, the study investigates the use of volatile memory forensics for real-time phishing detection. More details can be found here.


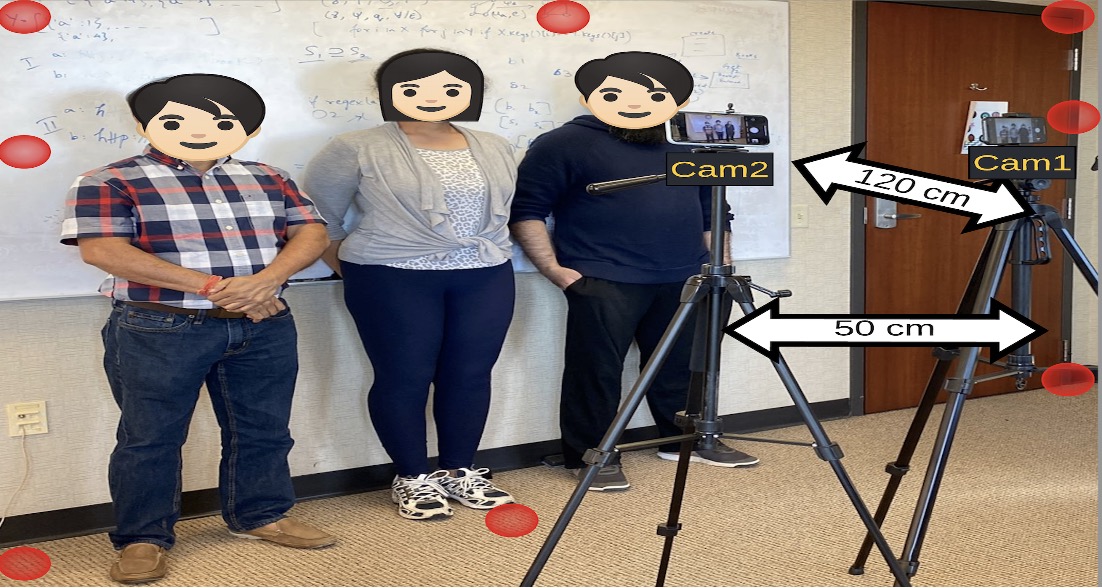

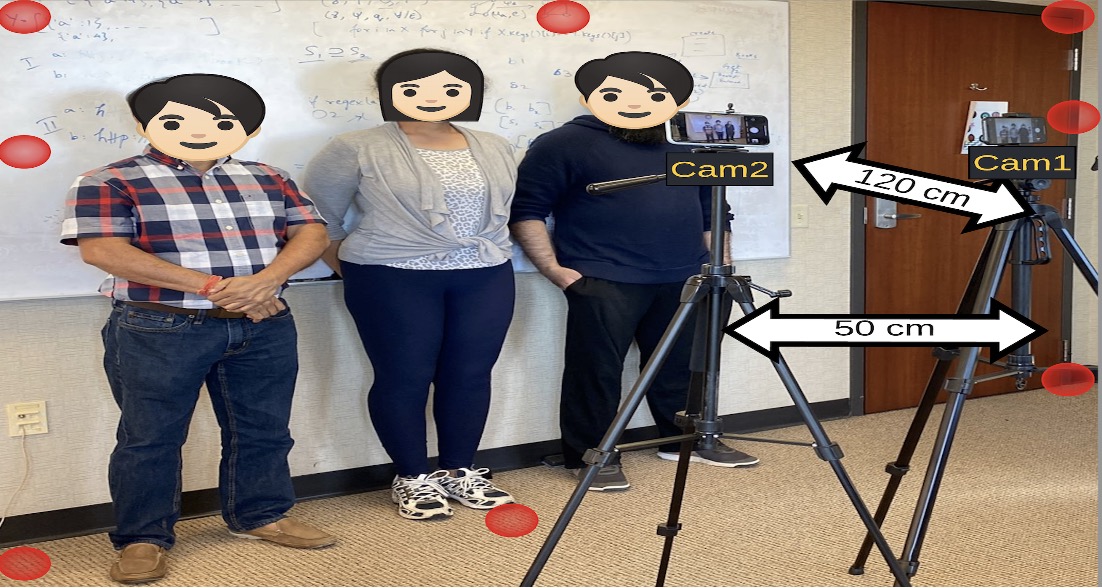

Bias-aware Gaze Uniformity Assessment in Group Images

Do you capture photos in group settings? => Yes. Are there multiple individuals taking pictures of the group? => Often. Do all members of the group face the same camera or gaze in the same direction? => Aahhh... No. Don't worry, we have GARGI for you!

Since the advent of the smartphone, the number of group images taken every day has been rising rapidly. A group image is one that contains more than one person. When taking a group picture, photographers usually struggle to make sure that every person looks in the same direction, typically toward the camera. The problem occurs more often when multiple photographers take pictures of the same group. The direction in which a person looks is called the gaze. Gaze uniformity is an important criterion for determining the aesthetic quality of a group image. The objective of this research is to develop a method that achieves uniform gazes among the individuals in group photographs and thereby enhances overall aesthetic quality. More details can be found here.


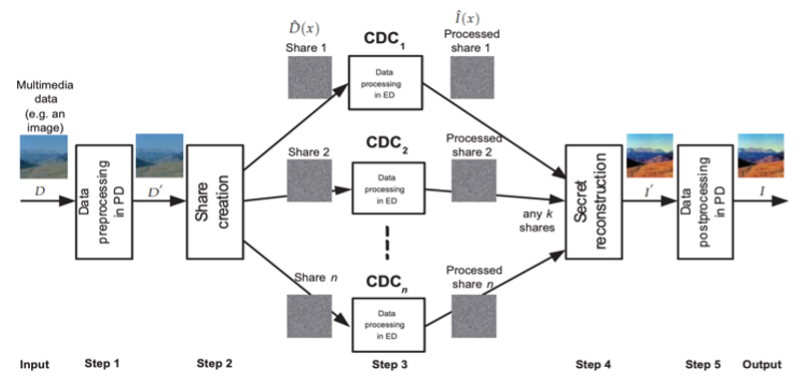

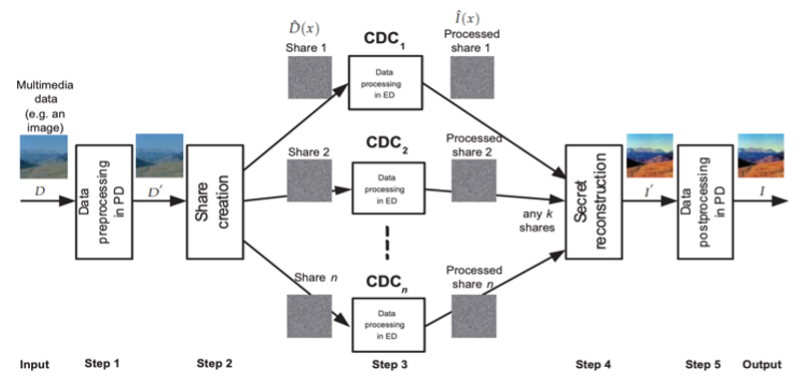

SecureCSuite: Secure Computations over Untrusted Cloud Servers

Do you use cloud servers for storing and processing your data? Your data could potentially be misused. We offer a solution that enables secure processing of encrypted data, ensuring both security and privacy.

It is now common practice to delegate both small- and large-scale data storage and computation to third-party high-performance computing servers such as cloud data centers. While these solutions offer highly scalable, virtualized resources for efficient service execution, they raise security and privacy concerns because the third-party service providers may be untrustworthy. To address this, the project introduces SecureCSuite, a framework for secure cloud-based computation. The primary objective of SecureCSuite is to execute the required tasks on encrypted data, thereby upholding the security and privacy of the data. We have instantiated the framework for various tasks, including image/video scaling (SecureCScale), enhancement (SecureCEnhance), document editing (SecureCEdit), PDF merging (SecureCMerge), searching (SecureCSearch), emailing (SecureCMail), volume data visualization (SecureCVolume), and social networking (SecureCSocial). More details can be found here.


Privacy-aware Multimedia Surveillance for Public Safety

Are you concerned about being monitored through electronic surveillance, such as CCTV cameras? Your privacy could be at risk. No worries, we design and develop methods that offer effective automated surveillance, ensuring safety without compromising privacy.

Due to the rise in terrorism, electronic surveillance using video cameras, audio sensors, and social media has become widely used to monitor activities and behaviors. Although these surveillance technologies have proven to be highly useful from a security perspective, they have raised significant concerns among people regarding privacy safeguards. Traditional privacy methods focus on explicit identity leaks, such as facial information, but often overlook implicit channels where identity can be inferred through behavior and temporal information. This research project aims to develop effective surveillance methods that can automatically detect suspicious behaviors and actions while preserving privacy by considering both implicit and explicit channels. More details can be found here.


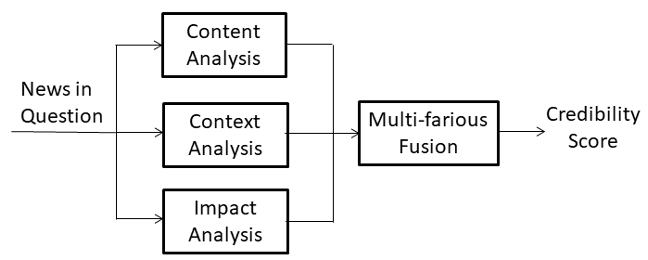

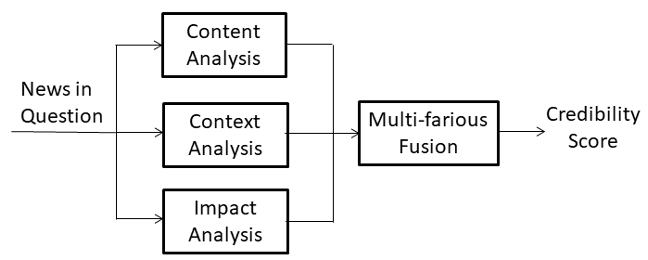

Characterizing, Verifying and Mitigating Disinformation on Social Media

Do you trust what you see on social media? => Hmmm, yes and no. Would you like to see a credibility score with each piece of media content? => Of course! That's our goal: to create fact-checking tools that provide those scores!

Falsifying multimedia assets is a form of social hacking designed to change a reader’s point of view, which may lead them to make misinformed decisions. This project focuses on identifying disinformation on media-rich social media platforms, such as Facebook and X (formerly Twitter). The goal is to develop novel methods to characterize and verify media-rich disinformation content on social media, and to evaluate the social acceptance of such disinformation in order to develop mitigation strategies. More details can be found here.


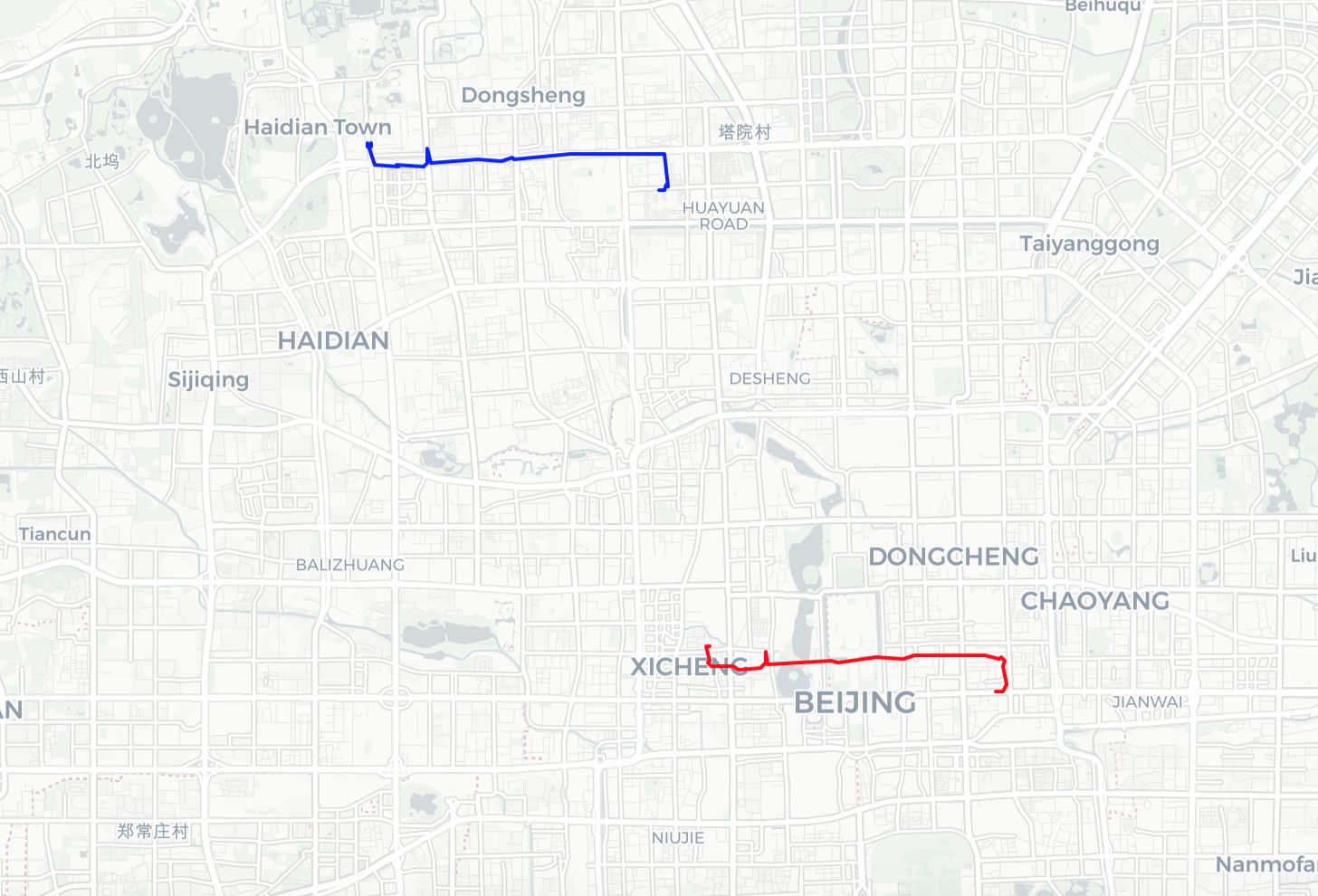

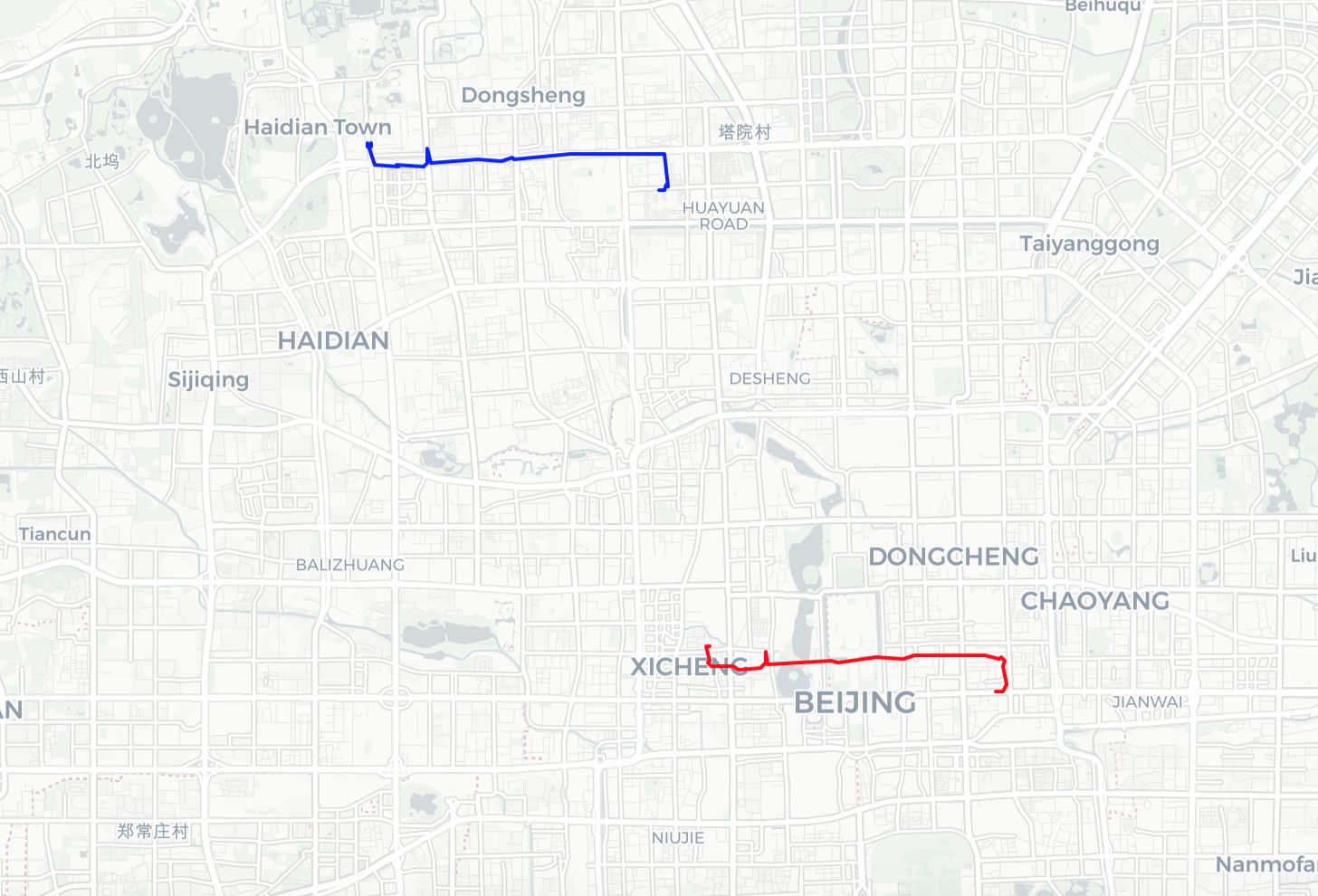

GeoSecure: A Location-Privacy-Aware Framework for Location-Based Services

Do you use GPS-driven location-based services (LBS) like fitness trackers? Your location data can pose serious security and privacy risks. No worries, we have the GeoSecure solution for you.

Location-based services (LBS) have become an essential part of everyday life. Smartphones and other GPS-enabled devices are widely used, and the services built on them include cab service apps, navigation maps, fitness trackers, and autonomous vehicles. LBS rely on tracking the user's GPS location, which is stored in the cloud. GPS data can reveal sensitive information about users, such as home and work locations, shopping habits, religious and political affiliations, and health conditions, so it is crucial to protect this information. The core idea of our research is to design algorithms that provide location-based services without revealing users' locations. This approach keeps users' information safe while still allowing service providers to offer their services. More details can be found here.


Last updated: July 23, 2024.